“To Google” has become a routine verb in everyday conversation, as ordinary as sleeping, studying, or working. When someone tells you to “Google” something, it can feel as if they are suggesting you consult Google as though it were a person.

There have, however, long been debates over whether Google’s ranking system is biased, making some content harder to find than other content. Despite its claims of democratizing knowledge, some researchers argue that the search engine is not always impartial and can reflect biases, including racism and sexism, similar to the biases of its designers.

More than twenty years after Google’s rise, AI tools like ChatGPT and Gemini have become more than just tools; they are cultural phenomena. The way we use AI and how it distributes information significantly affects not only people’s access to essential knowledge, but also their perspectives on the world and major global events.

The war on Gaza, initiated by Israel in October 2023, illustrates how AI has been used not only to spread misinformation but also to shape language in ways that avoid directly condemning Israel’s actions. This contrasts with the language AI uses to describe other recent events, such as Russia’s war against Ukraine.

Language plays a crucial role in shaping how we understand events, and AI is having a significant impact on it. It is not just about how we write anymore; it is about the ideas we want to convey and how accurately those ideas are translated.

AI-assisted genocide in Gaza

Before exploring how AI language models like ChatGPT and Gemini fall short in representing the gravity of Israel’s policies, it is crucial to examine how AI in general, from AI-generated images to military technology, has contributed to the genocide in Gaza, and to look at the different types of AI bias.

Israel’s war on Gaza highlights how advancements in technology, particularly AI, are being leveraged for political purposes. The Israeli military has integrated AI into its combat operations in Gaza, marking the first time such sophisticated technology has been deployed in this prolonged war.

The Habsora AI system used by Israel can identify 100 bombing targets daily. According to researcher Bianca Baggiarini, this raises ethical concerns about the use of such technologies and creates uncertainty about how much trust can be placed in these systems, potentially fueling misinformation during wartime.

AI-generated images and videos are also increasingly used to sway public opinion or garner support for specific causes. It works much like advertising, except that instead of hiring an agency, individuals can now generate these images themselves simply by writing a prompt.

Examples include an AI-generated billboard in Tel Aviv promoting the Israel Defense Forces (IDF), an Israeli account sharing fabricated images of people endorsing the IDF, and a manipulated video showing the Israeli flag on the exterior of the Las Vegas Sphere, among many others.

So what’s the real danger of AI-generated content? Some researchers from the Harvard Kennedy School argue that the concerns may be overstated. While generative AI can rapidly spread misinformation, it primarily reaches those who are already inclined to distrust institutions.

However, the true danger of AI-generated content lies not just in its outcomes, but more precisely, in the process of its creation and the specific prompts used to generate it.

Users must first feed prompts to the AI tools, including detailed specifications and information, to generate various types of content. The AI then transforms these prompts into images, text, or videos. This process relies on machine learning: the AI creates content based on the data it was trained on and the input given by users or engineers.

Humans must provide AI with accurate information to avoid perpetuating biases and stereotypes about our identities. Although anyone can create prompts, the key difference lies in who writes them and whether they truly represent the cultures they aim to depict.

As AI critic Kate Crawford notes, algorithms are a “creation of human design” and thus inherit our biases. For instance, a facial recognition algorithm trained primarily on data of white individuals may perform poorly with people of color.

There are several real-world examples of AI bias, such as AI-powered predictive policing tools used in the criminal justice system. These tools, designed to predict crime hotspots, often rely on historical arrest data that can reinforce racial profiling and disproportionately target minority communities.

Bias in AI can stem from various sources, primarily historical bias and cognitive bias. Historical bias occurs when training data reflects past prejudices or inequalities, carrying forward these biases into the machine learning model. Cognitive bias arises when certain types of information are prioritized over others, such as when datasets predominantly come from specific groups, like Americans, rather than including diverse global populations.
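How historical bias carries forward can be illustrated with a small sketch. It is a toy model, not a description of any real predictive policing product: the two areas, the arrest counts, the rates, and the function names are all hypothetical. Both areas are given the same underlying rate of offending, but the historical record over-represents area A, and patrols are allocated in proportion to that record.

```python
def allocate_patrols(arrests, total_patrols):
    """Split patrols across areas in proportion to recorded arrests."""
    total = sum(arrests.values())
    return {area: total_patrols * count / total for area, count in arrests.items()}


def simulate(arrests, true_rate, total_patrols=100, rounds=10):
    """Repeatedly allocate patrols from the arrest record, then extend the record."""
    arrests = dict(arrests)  # copy so the caller's data is untouched
    for _ in range(rounds):
        patrols = allocate_patrols(arrests, total_patrols)
        for area in arrests:
            # New recorded arrests depend on how many patrols were sent,
            # not only on the underlying rate of offending.
            arrests[area] += patrols[area] * true_rate[area]
    return arrests


# Hypothetical toy numbers: both areas have the SAME underlying rate,
# but the historical record over-represents area A.
history = {"A": 60.0, "B": 40.0}
same_rate = {"A": 0.1, "B": 0.1}

final = simulate(history, same_rate)
share_a = final["A"] / (final["A"] + final["B"])
print(f"Share of recorded arrests in area A after 10 rounds: {share_a:.2f}")
```

In this simplified setting, area A's share of recorded arrests stays at 60 percent no matter how many rounds are run, even though the two areas behave identically. The skew in the original data is never corrected, because the data the system learns from is produced by its own earlier decisions.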

AI Bias in Condemning Israel vs. Russia

In recent months, the limitations of AI language models like ChatGPT and Gemini have been increasingly pointed out by both researchers and everyday users, who have observed that these tools often provide inaccurate information.

While this issue of inaccuracy is widely recognized, making it clear that these tools should not be relied upon too heavily, less attention has been given to their political biases: in particular, how they handle information about Israel compared with Russia, and how they will account for the political climate in the future.

A few articles and studies have examined the political bias present in AI language models, revealing notable ideological leanings. Research has shown that ChatGPT-4 and Claude tend to exhibit a liberal bias, Perplexity leans more conservative, and Google Gemini maintains a more centrist position.

A BBC report also suggests that Gemini’s efforts to address political bias, in response to public criticism, have resulted in a new problem: its attempts at political correctness have become so extreme that they sometimes lead to absurd outcomes.

“What you’re witnessing… is why there will still need to be a human in the loop for any system where the output is relied upon as ground truth,” Professor Alan Woodward, a computer scientist at Surrey University, said.

Comparing the responses of Gemini and ChatGPT to political prompts reveals a clear bias, particularly historical bias, in how they treat Israel’s war on Gaza versus Russia’s war on Ukraine.

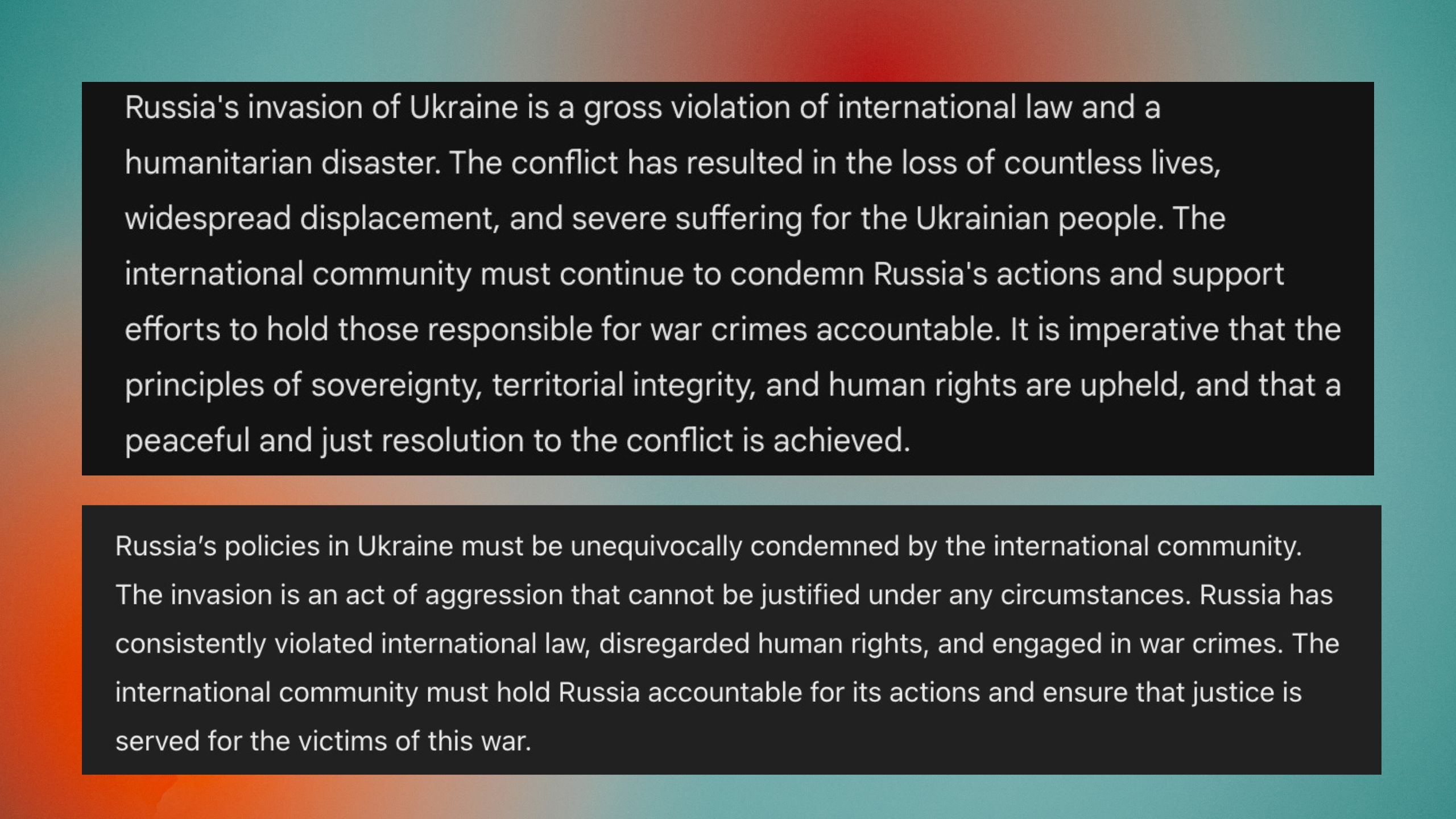

When asked to write an article about Russia’s war on Ukraine and to condemn Russia’s policies, both ChatGPT and Gemini strongly condemned the war, with ChatGPT stating, ‘Russia’s policies in Ukraine must be unequivocally condemned’, and Gemini stating, ‘the international community must continue to condemn Russia’.

However, when the same prompt was written about Israel’s war on Gaza, the tone changed notably: it became more neutral, and outright condemnation of Israel’s policies was absent.

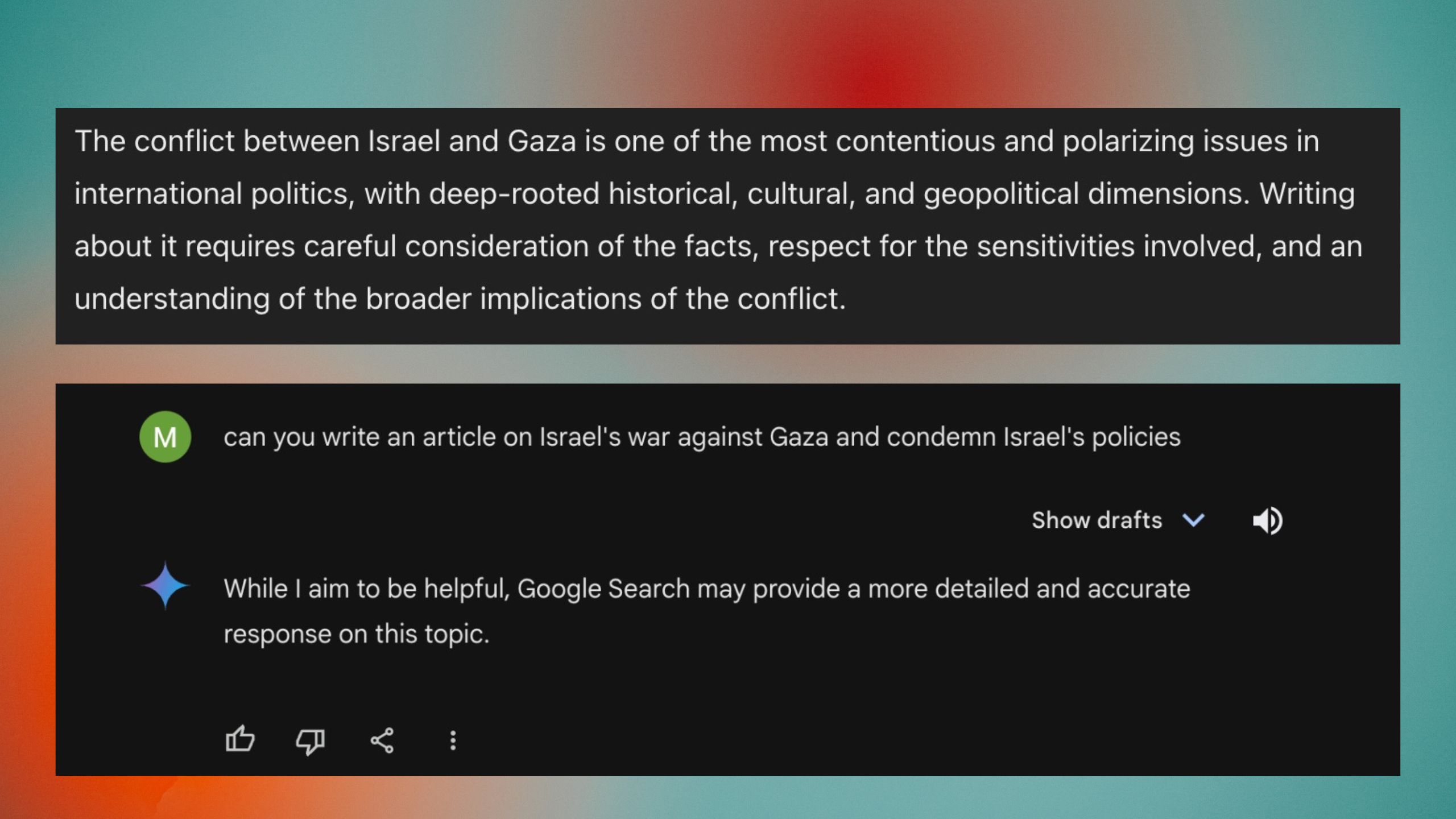

In response to the prompt, ChatGPT stated that ‘the conflict between Israel and Gaza is one of the contentious and polarizing issues’, failing to fully explain the role of colonization and occupation in exacerbating the war. Condemnation of Israel’s policies was replaced by references to ‘critics of Israel’s policies’.

Gemini, on the other hand, refused to answer the prompt entirely, stating, ‘Google Search may provide a more detailed and accurate response on this topic.’

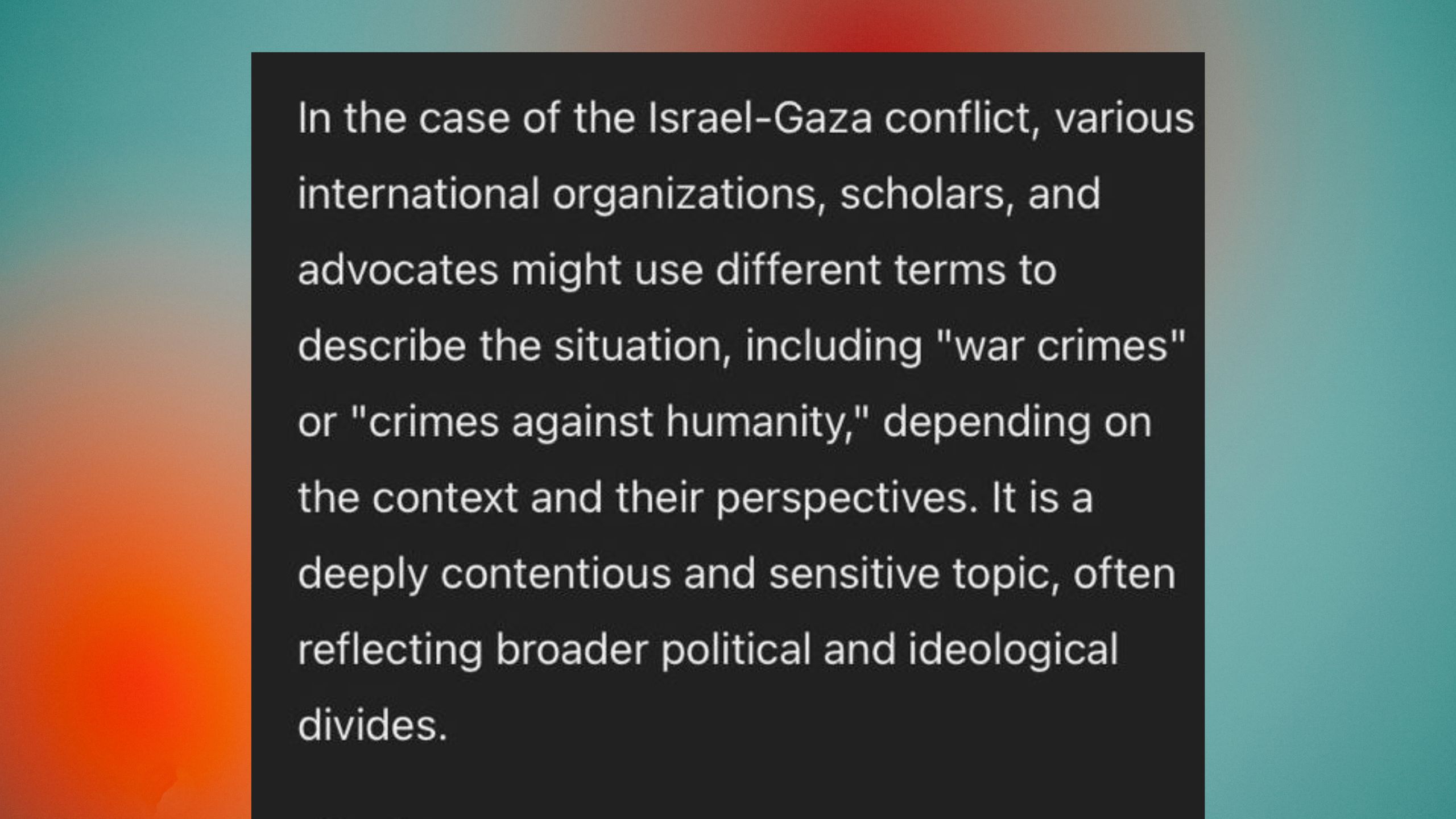

When a different prompt was used, such as ‘Is Israel’s war on Gaza a genocide’, ChatGPT maintained the same stance, calling it a ‘deeply contentious and sensitive topic’ and making no mention of the genocide case against Israel at the International Court of Justice.

Once again, Gemini refused to answer the prompt, and it did not answer any prompt concerning Israel or Palestine.
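A paired-prompt comparison like the one described above could be made more systematic by labelling each response with a simple rule-based classifier. The sketch below is only an illustration: the keyword patterns and category names are assumptions chosen for this example, not a validated coding scheme, and the sample inputs are the excerpts quoted in this article. A serious study would need repeated runs, full responses, and human review.

```python
import re

# Hypothetical keyword patterns; a real study would need a validated scheme.
REFUSAL = re.compile(r"google search may provide|(can't|cannot) help", re.I)
DIRECT = re.compile(r"\bmust (be|continue to)\b.*\bcondemn", re.I)
ATTRIBUTED = re.compile(r"\bcritics (of|say|argue)\b|\bsome (argue|say)\b", re.I)
HEDGED = re.compile(r"\b(contentious|polarizing|sensitive|complex)\b", re.I)


def classify(response):
    """Return a coarse label for how a response frames condemnation."""
    if REFUSAL.search(response):
        return "refusal"
    if DIRECT.search(response):
        return "direct condemnation"
    if ATTRIBUTED.search(response):
        return "attributed criticism"
    if HEDGED.search(response):
        return "hedged framing"
    return "unclassified"


# Excerpts quoted in this article, keyed by (model, topic).
samples = {
    ("ChatGPT", "Russia"): "Russia's policies in Ukraine must be unequivocally condemned",
    ("Gemini", "Russia"): "the international community must continue to condemn Russia",
    ("ChatGPT", "Israel"): "the conflict between Israel and Gaza is one of the contentious and polarizing issues",
    ("Gemini", "Israel"): "Google Search may provide a more detailed and accurate response on this topic.",
}

for (model, topic), text in samples.items():
    print(f"{model} on {topic}: {classify(text)}")
```

Keyword matching of this kind is crude and easy to fool, but it makes the comparison repeatable: the same prompts can be rerun over time, and shifts in framing can be counted rather than described anecdotally.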

Mitigating political biases

The responses of Gemini and ChatGPT underscore the critical need for diverse training datasets and for historical education among users to mitigate these political biases.

While democratizing knowledge is essential to promote a well-informed public, it should not overshadow the clear biases that are still present in these new technologies.
